Installing Hadoop and HBase on a Single Machine

1. Installing Hadoop

1.1 Configuring HDFS

Edit etc/hadoop/core-site.xml under the Hadoop installation directory:

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
      </property>
      <!-- Change the temporary directory: the default, /tmp/hadoop-${user.name}
           (for example /tmp/hadoop-hadoop), may be cleaned up by the system -->
      <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/home/local/data/hadoop/tmp</value>
      </property>
    </configuration>

Edit etc/hadoop/hdfs-site.xml under the Hadoop installation directory:

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <!-- The two paths below sit under the hadoop.tmp.dir set in core-site.xml -->
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/home/local/data/hadoop/tmp/dfs/name</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/home/local/data/hadoop/tmp/dfs/data</value>
      </property>
    </configuration>
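HDFS normally creates the NameNode and DataNode directories itself, but creating them up front can surface permission problems before the first format. A minimal sketch, assuming the directory layout configured above; the function name prepare_hdfs_dirs is made up for illustration and is not part of Hadoop:

```shell
# prepare_hdfs_dirs BASE: create BASE/dfs/name and BASE/dfs/data and
# fail if either directory is not writable by the current user.
# (Hypothetical helper, not part of Hadoop.)
prepare_hdfs_dirs() {
  base="$1"
  for sub in dfs/name dfs/data; do
    mkdir -p "$base/$sub" || return 1
    [ -w "$base/$sub" ] || return 1
  done
}

# Usage, run as the user that will start HDFS:
#   prepare_hdfs_dirs /home/local/data/hadoop/tmp
```

Running it as the same user that later starts HDFS matters, since the check only says something about that user's permissions.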

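Optionally, edit etc/hadoop/hadoop-env.sh under the Hadoop installation directory and set JAVA_HOME explicitly. Comment out the default line and add the JDK path:

    #export JAVA_HOME=${JAVA_HOME}
    export JAVA_HOME=/home/local/jdk1.8.0_231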
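A wrong JAVA_HOME tends to show up only when the daemons fail to start, so a quick check before starting anything may save time. A small sketch; check_java_home is a hypothetical helper name, and the path in the usage line is the one from the configuration above:

```shell
# check_java_home DIR: succeed only if DIR looks like a Java installation,
# i.e. DIR/bin/java exists and is executable. (Hypothetical helper.)
check_java_home() {
  [ -n "$1" ] && [ -x "$1/bin/java" ]
}

# Usage:
#   check_java_home /home/local/jdk1.8.0_231 || echo "JAVA_HOME looks wrong" >&2
```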

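1.2 Starting HDFS

Format the file system with bin/hdfs under the Hadoop installation directory. Do this only before the first start, because formatting again erases the existing HDFS metadata:

    bin/hdfs namenode -format

Start HDFS with sbin/start-dfs.sh under the Hadoop installation directory. The script connects to localhost over SSH, so set up the passwordless login from section 4 first:

    sbin/start-dfs.sh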

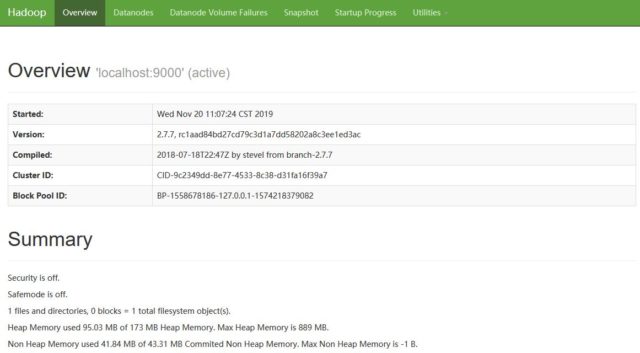
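Once start-dfs.sh has finished, a round trip through the file system is a more direct check than looking at the process list. The sketch below writes a small file, reads it back, and compares the contents. It assumes the hdfs command is on PATH; the function name and the scratch path under /tmp are made up for illustration:

```shell
# hdfs_smoke_test: write a small file into HDFS, read it back, and compare.
# (Hypothetical helper; uses only `hdfs dfs` subcommands.)
hdfs_smoke_test() {
  smoke_dir="/tmp/hdfs-smoke-$$"        # scratch directory inside HDFS
  expected="hello hdfs"
  hdfs dfs -mkdir -p "$smoke_dir" || return 1
  # "-" makes -put read the file contents from standard input
  printf '%s\n' "$expected" | hdfs dfs -put -f - "$smoke_dir/hello.txt" || return 1
  actual="$(hdfs dfs -cat "$smoke_dir/hello.txt")" || return 1
  # Clean up; the result of the comparison below is what counts
  hdfs dfs -rm -r -f -skipTrash "$smoke_dir" >/dev/null 2>&1
  [ "$actual" = "$expected" ]
}

# Usage:
#   hdfs_smoke_test && echo "HDFS read/write OK"
```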

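1.3 Verifying HDFS

Run jps in a terminal and check that the NameNode, DataNode and SecondaryNameNode processes are running. NodeManager and ResourceManager appear only after YARN has been started (see the optional YARN section below).

    18642 SecondaryNameNode
    18793 Jps
    1835 HMaster
    18475 DataNode
    18349 NameNode

HMaster belongs to HBase (section 2); it presumably appears in this sample because HBase was already running when the output was captured.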
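The jps check can be scripted so that a missing daemon is reported by name. A minimal sketch; missing_daemons is a hypothetical helper that reads jps output on standard input and prints every expected process name it does not find:

```shell
# missing_daemons NAME...: read `jps` output on stdin and print each
# expected daemon NAME that does not appear in it. (Hypothetical helper.)
missing_daemons() {
  jps_output="$(cat)"
  for name in "$@"; do
    # jps prints "<pid> <MainClass>"; compare the second field exactly
    printf '%s\n' "$jps_output" |
      awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' ||
      printf '%s\n' "$name"
  done
}

# Usage (requires a JDK on PATH for jps):
#   jps | missing_daemons NameNode DataNode SecondaryNameNode
```

An empty result means every listed daemon was found. After YARN has been started, NodeManager and ResourceManager can be added to the argument list.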

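Open port 50070, the default port of the Hadoop web UI in Hadoop 2.x (Hadoop 3.x uses 9870), in the firewall, then visit http://<host>:50070 in a browser:

    firewall-cmd --zone=public --add-port=50070/tcp --permanent
    firewall-cmd --reload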

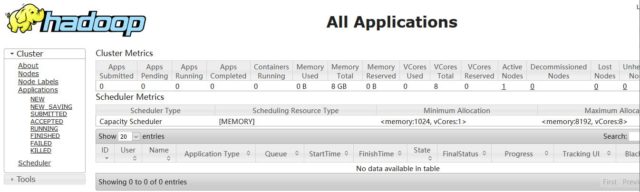

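1.4 YARN resource management (optional)

mapreduce.framework.name is a MapReduce setting and belongs in etc/hadoop/mapred-site.xml (in Hadoop 2.x, create the file by copying mapred-site.xml.template):

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
    </configuration>

Edit etc/hadoop/yarn-site.xml under the Hadoop installation directory:

    <configuration>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
    </configuration>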

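Start YARN with sbin/start-yarn.sh under the Hadoop installation directory:

    sbin/start-yarn.sh

Open port 8088, the default port of the YARN web UI, in the firewall, then visit http://<host>:8088 in a browser:

    firewall-cmd --zone=public --add-port=8088/tcp --permanent
    firewall-cmd --reload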

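2. Installing HBase

Install and start HBase as described in the standalone HBase guide ("HBase 单机版安装启动"), but change hbase-site.xml in the conf directory so that HBase stores its data in HDFS: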

    <configuration>
      <property>
        <name>hbase.rootdir</name>
        <value>hdfs://localhost:9000/hbase</value>
      </property>
      <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
        <description>
          The mode the cluster will be in. Possible values are
          false: standalone and pseudo-distributed setups with managed ZooKeeper
          true: fully-distributed with unmanaged ZooKeeper Quorum (see hbase-env.sh)
        </description>
      </property>
      <property>
        <name>hbase.zookeeper.property.dataDir</name>
        <value>/home/local/data/zookeeper</value>
      </property>
    </configuration>
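hbase.rootdir has to point at the same file system that fs.defaultFS names in core-site.xml; a mismatched host or port is an easy mistake to make. The sketch below compares the two values as plain strings. rootdir_on_fs is a hypothetical helper name, while hdfs getconf in the usage line is the stock Hadoop command for reading a configuration key:

```shell
# rootdir_on_fs ROOTDIR DEFAULT_FS: succeed if ROOTDIR is a path on DEFAULT_FS,
# e.g. hdfs://localhost:9000/hbase on hdfs://localhost:9000.
# (Hypothetical helper; a plain string comparison, no network access.)
rootdir_on_fs() {
  rootdir="$1"
  fs="${2%/}"            # tolerate a trailing slash on fs.defaultFS
  case "$rootdir" in
    "$fs"/*) return 0 ;;
    *)       return 1 ;;
  esac
}

# Usage, with Hadoop's bin directory on PATH:
#   rootdir_on_fs hdfs://localhost:9000/hbase "$(hdfs getconf -confKey fs.defaultFS)"
```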

Edit hbase-env.sh in the HBase conf directory so that HBase uses the ZooKeeper instance it manages itself:

    # Tell HBase whether it should manage its own instance of ZooKeeper or not.
    export HBASE_MANAGES_ZK=true

3. Configuring environment variables

    # Edit /etc/profile and add the HBase and Hadoop settings
    vim /etc/profile
    # Add the following lines
    # HBase
    export HBASE_HOME=/home/local/hbase-1.4.11
    export PATH=$PATH:$HBASE_HOME/bin
    # Hadoop
    export HADOOP_HOME=/home/local/hadoop-2.7.7
    export PATH=$PATH:$HADOOP_HOME/bin
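Changes to /etc/profile take effect only in new login shells or after `source /etc/profile`, so it can help to confirm that both bin directories actually made it onto PATH. A small sketch; path_contains is a hypothetical helper name:

```shell
# path_contains DIR: succeed if DIR is one of the entries in $PATH.
# (Hypothetical helper; wrapping PATH in colons lets every entry match ":DIR:".)
path_contains() {
  case ":$PATH:" in
    *":$1:"*) return 0 ;;
    *)        return 1 ;;
  esac
}

# Usage, after `source /etc/profile`:
#   path_contains "$HBASE_HOME/bin" && path_contains "$HADOOP_HOME/bin" && echo "PATH OK"
```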

4. Configuring passwordless SSH login

    ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa
    cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
    chmod 0600 ~/.ssh/authorized_keys
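If the login still prompts for a password, overly permissive modes on ~/.ssh or authorized_keys are a common cause, since sshd typically rejects keys stored that way. A minimal sketch that tightens both and then tests the login without allowing a password prompt; fix_ssh_perms is a hypothetical helper name, and BatchMode is a standard OpenSSH client option:

```shell
# fix_ssh_perms DIR: apply the permissions sshd typically expects,
# 700 on the .ssh directory and 600 on authorized_keys.
# (Hypothetical helper; fails if authorized_keys does not exist.)
fix_ssh_perms() {
  ssh_dir="$1"
  [ -f "$ssh_dir/authorized_keys" ] || return 1
  chmod 700 "$ssh_dir" && chmod 600 "$ssh_dir/authorized_keys"
}

# Usage:
#   fix_ssh_perms ~/.ssh
#   ssh -o BatchMode=yes localhost true && echo "passwordless login works"
```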